Do we need Sitemaps?

If you are old enough to remember the days when websites had an ugly, static HTML structure with no dynamic elements whatsoever, you probably recall that a sitemap used to be an essential part of building a website. Back then, a sitemap was a plain HTML page, created manually in an HTML editor, that listed links to every page on the website. Many people think this practice is history and that sitemaps are no longer needed. In this article, we are going to show you what a sitemap is and why you still need one.

A long time ago, as we have already mentioned, sitemaps had to be created separately, which was a lot of extra work: the sitemap had to be updated by hand whenever new pages were added or unwanted pages were deleted. Today, a large share of websites run on WordPress or another content management system, and sitemaps can be generated automatically, either with a third-party plugin or a custom-made script.

There are two types of sitemaps:

- HTML sitemap – a simple web page containing links that lead to all pages, which can be viewed by both visitors and search engine bots.

- XML sitemap – a structured file that lists the URLs of the pages on your website that you want search engines to find. This file is intended for search engines only, to help them discover and crawl your whole site.
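To make the XML format concrete, here is a minimal Python sketch of the kind of file a sitemap script produces, using only the standard library. It assumes you already have a list of absolute page URLs with their last-modified dates; the function name `build_xml_sitemap` and the `yourdomain.com` URLs are illustrative placeholders, not part of any plugin. The `<urlset>`, `<url>`, `<loc>` and `<lastmod>` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol, version 0.9.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_xml_sitemap(pages: list[tuple[str, date]]) -> str:
    """Return sitemap XML for a list of (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&", "<", ">"
        # <lastmod> uses the W3C Datetime format; a plain YYYY-MM-DD date is valid.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration.
pages = [
    ("https://www.yourdomain.com/", date(2024, 1, 15)),
    ("https://www.yourdomain.com/my-new-post/", date(2024, 2, 1)),
]
print(build_xml_sitemap(pages))
```

ElementTree escapes characters such as `&` in query strings automatically, which the protocol requires for URLs inside `<loc>`.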

How does it work?

Let’s say you’ve just published a new post. If you use a plugin on your server to generate the sitemap (HTML or XML), the plugin automatically adds the new URL to the sitemap; in an HTML sitemap, it uses the page title as the anchor text. The whole process runs in the background, and unless something goes seriously wrong, the sitemap requires zero maintenance. If you are using WordPress and decide to migrate your blog to another server, backing up the sitemap and restoring it on the new hosting server takes just a few seconds.
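For an HTML sitemap, the “title as anchor text” idea boils down to something like the hypothetical Python helper below. It assumes the plugin already knows each page’s title and URL (in a real setup these would come from your CMS) and simply rebuilds the whole list every time, so a new post shows up as soon as it is added to the input. The name `build_html_sitemap` is illustrative only.

```python
from html import escape


def build_html_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return an HTML <ul> of links; each page title becomes the anchor text."""
    items = []
    for title, url in pages:
        # Escape both values so titles like "Q&A" or quotes can't break the markup.
        items.append(f'  <li><a href="{escape(url, quote=True)}">{escape(title)}</a></li>')
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical pages; a plugin would read these from the CMS instead.
pages = [
    ("Home", "https://www.yourdomain.com/"),
    ("Do we need Sitemaps?", "https://www.yourdomain.com/do-we-need-sitemaps/"),
]
# Publishing a new post is just one more entry; the list is regenerated from scratch.
pages.append(("Q&A: Sitemaps", "https://www.yourdomain.com/sitemap-qa/"))
print(build_html_sitemap(pages))
```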

What are sitemaps useful for?

As you might have guessed, a sitemap helps Google (and other search engines) discover all the pages on your site, not just the ones it happens to reach by following links. “Deep links” (pages that take more than 2-3 clicks to reach from the homepage) might not get indexed simply because Googlebot’s visits are quite brief. If most of your content is hard to reach from the homepage and is not listed in a sitemap, Googlebot may index only a small, easily accessible portion of your website during that short visit, rather than the entire site.
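One way to check whether you have a deep-link problem is to measure click depth: the minimum number of clicks needed to reach each page from the homepage. The sketch below runs a breadth-first search over a hypothetical internal-link graph (in practice you would collect it with a crawler); the threshold of 3 clicks mirrors the rough “2-3 clicks” figure above and is an assumption, not a Google rule. Pages that never appear in the result at all are orphans, which crawlers can only find through a sitemap.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/2015/old-post/"],
}
depths = click_depths(site)
deep_pages = sorted(p for p, d in depths.items() if d > 3)

# Pages you know exist (e.g. from your sitemap) that no internal link reaches.
all_pages = set(site) | {"/2015/old-post/", "/landing/promo/"}
orphans = sorted(all_pages - set(depths))
print(deep_pages, orphans)
```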

If a large portion of your website is not indexed, adding a sitemap can help with this problem. But since there are two types of sitemaps, HTML and XML (even though the term “sitemap” is often used for both today), they are used differently.

A link to a traditional HTML sitemap is usually placed in your website’s footer. While it may have some SEO benefits, it is mainly used by visitors who want a quick overview of your entire website. Linking to your XML sitemap, on the other hand, is optional, as that file is meant strictly for search engine bots.

How to use sitemap.xml for search engine optimization?

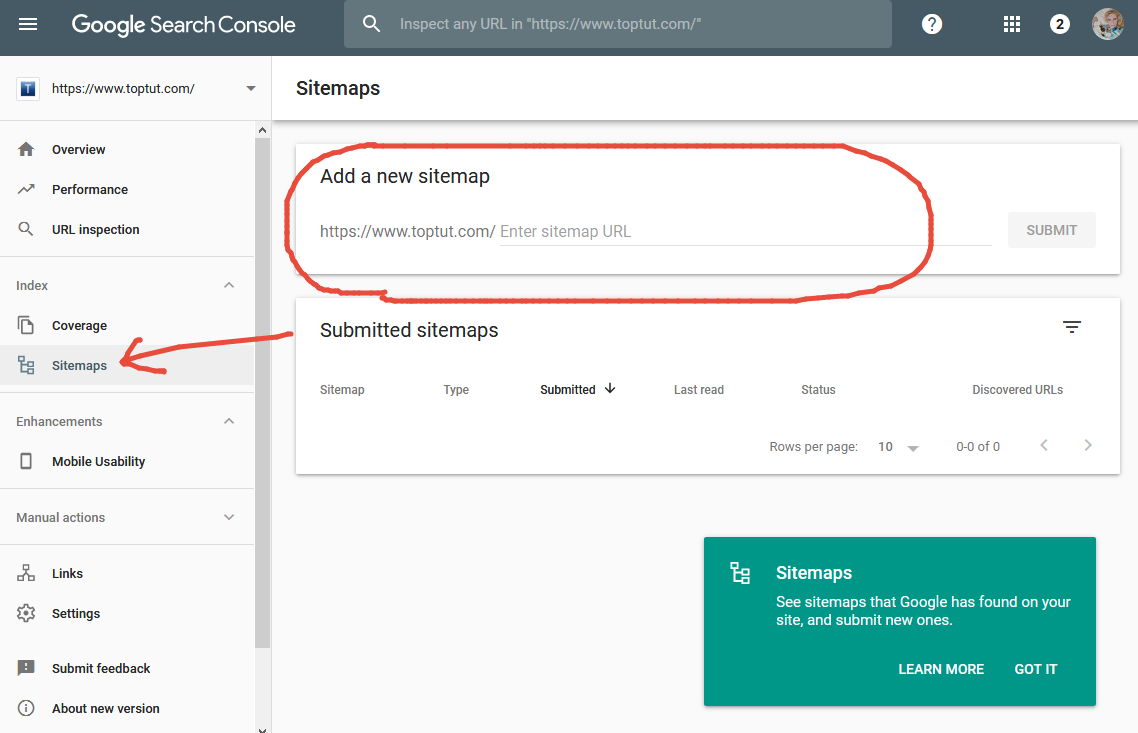

If you use Google Search Console, you can submit your sitemap file directly from your account. This way you can sleep better at night, knowing that Google is keeping up with your website. Essentially, submitting the sitemap tells Google where to find all the internal pages you want crawled.

How to submit your sitemap using Google Search Console (GSC)?

1. Log in to your Google Search Console account.

2. Select the website you want to submit a sitemap for.

3. In the left sidebar, open “Sitemaps” (under “Indexing”). If an old sitemap you no longer use is listed there (perhaps a relic from 2005), remove it.

4. Under “Add a new sitemap,” enter the address of your new sitemap (for example, sitemap_index.xml).

5. Click “Submit.”
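Search Console reports problems after you submit, but you may want to sanity-check the file first. This Python sketch (the `check_sitemap` name is hypothetical) parses the XML, confirms the root element is a sitemaps.org `<urlset>` or `<sitemapindex>`, and enforces two rules from the protocol: URLs must be absolute, and a single file may list at most 50,000 entries. It does not check everything the protocol specifies (for example, the 50 MB uncompressed size limit).

```python
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def check_sitemap(xml_bytes: bytes) -> list[str]:
    """Return the <loc> URLs of a sitemap, raising ValueError on common problems."""
    root = ET.fromstring(xml_bytes)  # raises ET.ParseError if the XML is malformed
    if root.tag not in (NS + "urlset", NS + "sitemapindex"):
        # An old HTML sitemap ends up here: it is not a sitemaps.org document.
        raise ValueError(f"unexpected root element: {root.tag}")
    locs = [el.text.strip() for el in root.iter(NS + "loc") if el.text]
    if not locs:
        raise ValueError("sitemap contains no <loc> entries")
    if len(locs) > MAX_URLS:
        raise ValueError(f"{len(locs)} entries exceeds the 50,000 limit per file")
    relative = [u for u in locs if not u.startswith(("http://", "https://"))]
    if relative:
        raise ValueError(f"URLs must be absolute: {relative[:3]}")
    return locs


sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yourdomain.com/</loc></url>
</urlset>"""
print(check_sitemap(sample))
```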

How to make sure search engines find my sitemap?

Apart from submitting your sitemap in Google Search Console, you should also reference it in your robots.txt file. This file gives crawlers such as Googlebot “instructions” about what they should crawl and what they should skip. The robots.txt file always sits in your site’s root directory, so it can be accessed at https://yourdomain.com/robots.txt. If you are using WordPress, a typical robots.txt looks like this:

```
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.yourdomain.com/sitemap_index.xml
```
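If you want to confirm what a robots.txt like this actually says, Python’s standard-library `urllib.robotparser` can parse it offline. The sketch below assumes Python 3.8 or later (for `site_maps()`) and reuses the placeholder `yourdomain.com` URLs; the post and admin-page URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The WordPress-style rules from the example, as plain text.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.yourdomain.com/sitemap_index.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
# URLs declared with "Sitemap:" lines (site_maps() requires Python 3.8+).
print(rules.site_maps())
# A regular post is crawlable; the admin area is not.
print(rules.can_fetch("Googlebot", "https://www.yourdomain.com/my-new-post/"))
print(rules.can_fetch("Googlebot", "https://www.yourdomain.com/wp-admin/options.php"))
```

One caveat: `urllib.robotparser` applies the first matching rule in file order, whereas Google uses the most specific (longest) matching rule. So Python would report `/wp-admin/admin-ajax.php` as blocked even though Googlebot lets the longer `Allow:` line win; treat this as a quick check rather than a Googlebot simulator.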

A standard WordPress installation generates a generic robots.txt automatically, and you can later customize which paths appear under “Allow:” and “Disallow:”. Robots.txt rules can apply to all search bots or only to particular ones, for example only Googlebot or only Bingbot.
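Bot-specific rules are written as separate `User-agent:` groups: each crawler obeys the group that names it and falls back to `User-agent: *` only if none does. The policy below (keep Googlebot out of `/drafts/`, block Bingbot entirely, allow everyone else) is hypothetical and only for illustration, not a recommendation; `ExampleBot` is a made-up crawler name. The sketch checks the rules with Python’s `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical per-bot policy, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: Bingbot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# An empty "Disallow:" in the catch-all group means "nothing is blocked".
for bot in ("Googlebot", "Bingbot", "ExampleBot"):
    for path in ("/blog/", "/drafts/new-post/"):
        allowed = parser.can_fetch(bot, "https://www.yourdomain.com" + path)
        print(f"{bot:<10} {path:<18} {'allowed' if allowed else 'blocked'}")
```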

Should you consider other Search Engines?

Of course, other search engines also have their own “webmaster tools” for submitting your website’s sitemap; Bing, for example, offers Bing Webmaster Tools, and Bing’s index also powers Yahoo! search results. I bet you didn’t know that! If you have a lot of spare time and nothing else to do, by all means, submit your website to other search engines; it definitely won’t do any damage. Generally speaking, the same fundamentals that get you onto the first page in Bing or Yahoo! also help in Google, although a high ranking on one search engine does not guarantee the same result on another.

Should you use online sitemap generators?

For those of you who have static web pages and do not use WordPress or any other content management system, the sitemap is going to be an extra step in building the website. Since there is no on-site script to generate your sitemap automatically, you will have to create it yourself. Luckily, you can use any free online sitemap generator. Bear in mind, though, that whenever the structure of your website changes and you add or delete pages, you will have to regenerate your sitemap and resubmit it in Google Search Console.
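As an alternative to an online generator, a small script can rebuild the sitemap from your site folder each time you change it. This Python sketch is hypothetical (the name `sitemap_from_directory` is illustrative) and rests on two assumptions: every `.html` file under the folder is a public page, and your server serves `index.html` at its folder URL (for example `/blog/` rather than `/blog/index.html`). It uses each file’s modification time as `<lastmod>`.

```python
from datetime import datetime, timezone
from pathlib import Path
from urllib.parse import quote
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_from_directory(site_root: str, base_url: str) -> str:
    """Build sitemap XML listing every .html file under site_root."""
    root = Path(site_root)
    entries = []
    for path in sorted(root.rglob("*.html")):
        rel = path.relative_to(root).as_posix()
        # Assumption: "index.html" is served at its folder URL.
        if rel == "index.html":
            rel = ""
        elif rel.endswith("/index.html"):
            rel = rel[: -len("index.html")]
        # Percent-encode the path (e.g. spaces), then XML-escape the full URL.
        loc = base_url.rstrip("/") + "/" + quote(rel)
        # The file's modification time stands in for <lastmod>.
        mtime = datetime.fromtimestamp(path.stat().st_mtime, tz=timezone.utc)
        entries.append(
            f"  <url><loc>{escape(loc)}</loc>"
            f"<lastmod>{mtime.date().isoformat()}</lastmod></url>"
        )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<urlset xmlns="{SITEMAP_NS}">\n'
        + "\n".join(entries)
        + "\n</urlset>\n"
    )
```

You might call it as `sitemap_from_directory("public_html", "https://www.yourdomain.com")` (both arguments are placeholders), write the result to `sitemap.xml` in the site root, and then resubmit it in Search Console as described above.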

Does a sitemap guarantee my content will be indexed?

After reading about all the SEO benefits of a sitemap, you may conclude that it guarantees your entire website will be indexed. Sadly, the answer is no. Why? First of all, Google is picky and does not index every page it stumbles upon.

Why doesn’t a sitemap guarantee indexing, let alone a better ranking? With the enormous expansion of the internet, Google’s algorithms keep evolving to filter out and combat spammy web pages. A sitemap can only help Google discover your pages and put them forward for crawling, and being crawled does not guarantee that a page will be indexed.

If Google’s algorithms judge that your content is of high enough quality to be worth indexing, your pages will be indexed.

Conclusion

Just like with any other search engine optimization practice, the more guidelines you carefully follow, the better, and sitemaps are no exception. Keep both your HTML and XML sitemaps up to date, submit the XML sitemap in Google Search Console, don’t forget to reference it with a “Sitemap:” line in your robots.txt file, and, as with any other SEO method, hope for the best. Good luck!